Is the AI Agent framework the final piece of the puzzle? How should we interpret the framework's "wave-particle duality"?

Reprinted from panewslab

01/03/2025 · Author: Kevin, Researcher at BlockBooster

As a key piece of the puzzle for the industry's development, the AI Agent framework may hold dual potential: driving real technology adoption and maturing the ecosystem. The frameworks most discussed in the market include Eliza, Rig, Swarms, and ZerePy. They attract developers and build reputation through their GitHub repositories, and they issue tokens in the form of "library coins." Just as light has the properties of both a wave and a particle, an Agent framework has serious externalities and Memecoin characteristics at the same time. This article explains this "wave-particle duality" and why the Agent framework may become the final piece of the puzzle.

The externalities brought about by the Agent framework can leave spring buds after the bubble subsides.

Since the birth of GOAT, the Agent narrative has hit the market with growing force. Like a Kung Fu master with "Memecoin" as the left fist and "industry hope" as the right palm, it will always beat you with one move or the other. In practice, the application scenarios of AI Agents are not strictly delineated, and the boundaries between platforms, frameworks, and specific applications are blurred. Still, based on the development preferences of tokens or protocols, they can be roughly divided into the following categories:

- Launchpad: an asset issuance platform. Examples are Virtuals Protocol and clanker on the Base chain, and Dasha on the Solana chain.
- AI Agent application: sits somewhere between Agent and Memecoin and stands out in how its memory is configured, such as GOAT and aixbt. These applications generally produce one-way output and accept very limited input.
- AI Agent engine: griffain on the Solana chain and Specter AI on the Base chain. griffain can evolve from a read-write mode to a read, write, and act mode; Specter AI is a RAG engine for on-chain search.
- AI Agent framework: for a framework platform, the Agent itself is the asset, so the Agent framework is the Agent's asset issuance platform, its Launchpad. Current representative projects include ai16z, Zerebro, ARC, and the recently much-discussed Swarms.
- Other niche directions: comprehensive Agent Simmi; AgentFi protocol Mode; falsification Agent Seraph; real-time API Agent Creator.Bid.

Looking closer at the Agent framework, we can see that it has ample externalities. With the major public chains and protocols, developers can only choose among different programming-language environments, and the total number of developers in the industry has not grown in step with market capitalization. GitHub repositories, by contrast, are where Web2 and Web3 developers build consensus. A developer community established there is more attractive and influential to Web2 developers than any "plug and play" package built by a single protocol.

The four frameworks mentioned in this article are all open source: ai16z's Eliza framework has received 6200 stars; Zerebro's ZerePy framework has received 191 stars; ARC's Rig framework has received 1700 stars; and the Swarms framework has received 2100 stars. Eliza is currently used in a wide range of Agent applications and has the broadest coverage. ZerePy is not yet highly developed: its focus is mainly on X, and it does not yet support local LLMs or integrated memory. Rig is relatively difficult to develop with, but it gives developers the most freedom for performance optimization. Swarms has no use cases yet apart from mcs, which the team itself launched, but it can integrate different frameworks and leaves a lot of room for imagination.

In the classification above, the Agent engine and the Agent framework are listed separately, which may cause confusion, but I think the two are different. First, why call it an engine? The association with real-life search engines is a fairly apt analogy. Unlike homogeneous Agent applications, an Agent engine performs at a higher level, but it is also a fully encapsulated black box that can only be adjusted through its API. Users can experience the engine's performance by forking it, but they do not get the full picture or the freedom of customization that a basic framework offers. Each user's engine is like a mirror image generated from the tuned Agent, and the user interacts with that mirror image. A framework, by contrast, is essentially about adapting to a chain, because the ultimate goal of building an Agent framework is to integrate with the corresponding chain. How to define data interaction, how to define data verification, how to define block size, and how to balance consensus and performance are all things a framework needs to consider. And the engine? It only needs to fine-tune the model thoroughly and set up the relationship between data interaction and memory in one particular direction. Performance is the only evaluation criterion for an engine, but not for a framework.

Using the perspective of "wave-particle duality" to evaluate the Agent framework may be a prerequisite to ensure that we are moving in the right direction.

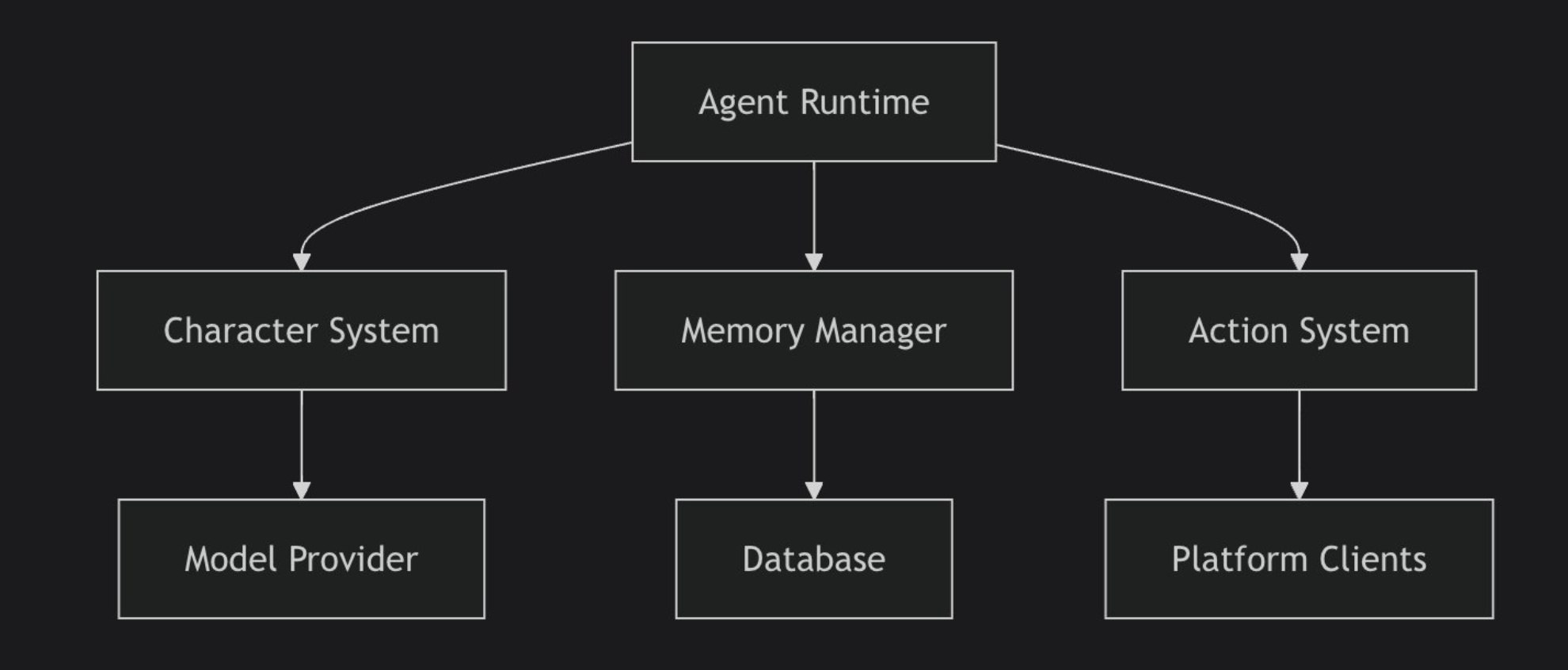

In the life cycle of an Agent handling one input and producing one output, three parts are required. First, the underlying model determines the depth and manner of thinking. Then memory is where customization happens: after the base model produces its output, that output is modified based on memory. Finally, the output is delivered on different clients.

Source: @SuhailKakar
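The three-part life cycle described above (base model, memory, clients) can be sketched roughly as follows. This is a minimal illustration, not code from any of the frameworks discussed: `base_model`, `Memory`, `run_agent`, and the client names are all hypothetical, and the "model" is a stub that returns a fixed-format string instead of calling an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def base_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; a real agent would query a model here."""
    return f"draft reply to: {prompt}"


@dataclass
class Memory:
    """Customization layer: a persona plus a running history of inputs."""
    persona: str
    history: List[str] = field(default_factory=list)

    def apply(self, prompt: str, draft: str) -> str:
        # Record the input, then reshape the model's draft using stored state.
        self.history.append(prompt)
        return f"[{self.persona}] {draft} (turn {len(self.history)})"


def run_agent(
    prompt: str,
    model: Callable[[str], str],
    memory: Memory,
    clients: Dict[str, Callable[[str], str]],
) -> Dict[str, str]:
    draft = model(prompt)                # 1. base model produces the raw output
    final = memory.apply(prompt, draft)  # 2. memory modifies that output
    # 3. the same final output is delivered through every configured client
    return {name: send(final) for name, send in clients.items()}


# Hypothetical clients that only format text instead of calling a platform API.
clients = {
    "discord": lambda text: f"discord> {text}",
    "telegram": lambda text: f"telegram> {text}",
}
memory = Memory(persona="friendly analyst")
outputs = run_agent("What is GOAT?", base_model, memory, clients)
print(outputs["discord"])
```

Keeping the three stages as separate, swappable pieces is the point of the sketch: changing the model, the memory, or the client list does not affect the other two.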

To show that the Agent framework has "wave-particle duality": the "wave" stands for the "Memecoin" characteristics, representing community culture and developer activity and emphasizing an Agent's appeal and ability to spread; the "particle" stands for the "industry hope" characteristics, representing underlying performance, practical use cases, and technical depth. I will illustrate both aspects using the development tutorials of three frameworks as examples:

Quickly assembled Eliza framework

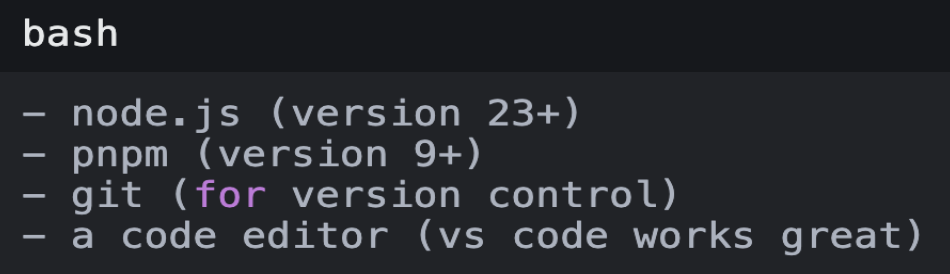

1. Set up the environment

Source: @SuhailKakar

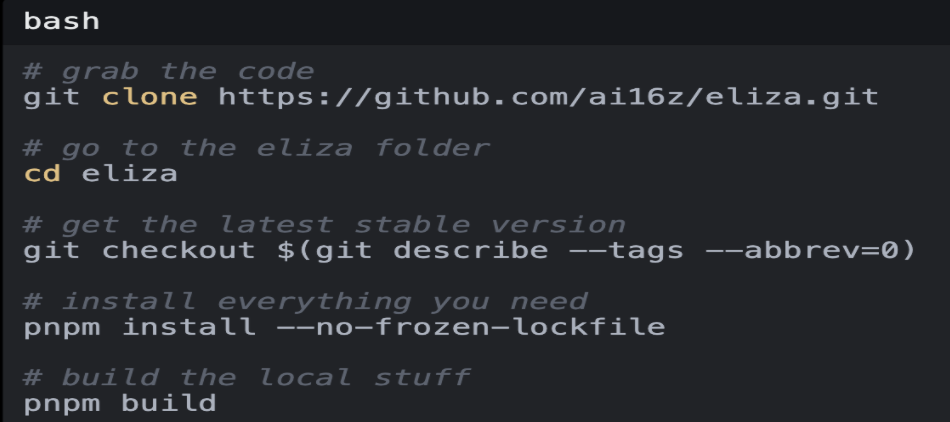

2. Install Eliza

Source: @SuhailKakar

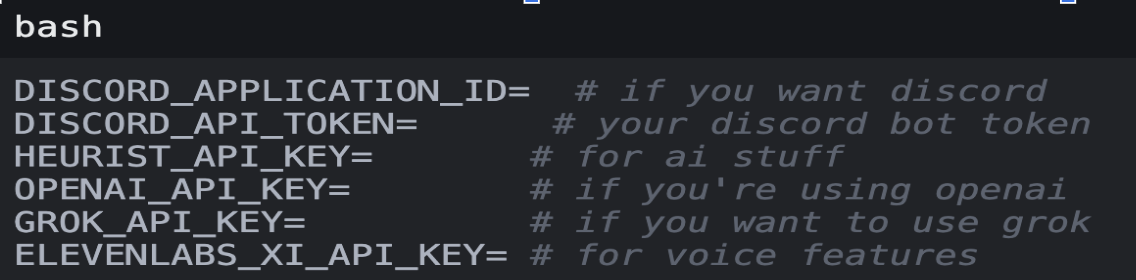

3. Configuration file

Source: @SuhailKakar

4. Set Agent personality

Source: @SuhailKakar

The Eliza framework is relatively easy to use. It is based on TypeScript, a language that most Web and Web3 developers are familiar with. The framework is concise and not overly abstract, so developers can easily add the functions they want. Step 3 shows that Eliza can be integrated with multiple clients; you can think of it as an assembler for multi-client integration. Eliza supports platforms such as DC, TG and

Thanks to the simplicity of the framework and the richness of its interfaces, Eliza has greatly lowered the barrier to entry and achieved relatively unified interface standards.
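The "assembler for multi-client integration" idea can be sketched as a configuration file that names the clients to enable, plus a registry that maps those names to adapters. Eliza itself is written in TypeScript; the Python below is only a language-neutral illustration. The JSON fields, `CLIENT_REGISTRY`, `register_client`, and `assemble` are all hypothetical and are not Eliza's actual schema or API.

```python
import json
from typing import Callable, Dict, List

# Hypothetical registry that maps a client name to an adapter function.
CLIENT_REGISTRY: Dict[str, Callable[[str, str], str]] = {}


def register_client(name: str):
    """Decorator that adds an adapter to the registry under the given name."""
    def decorator(fn: Callable[[str, str], str]):
        CLIENT_REGISTRY[name] = fn
        return fn
    return decorator


@register_client("discord")
def discord_client(agent_name: str, text: str) -> str:
    return f"[discord] {agent_name}: {text}"


@register_client("telegram")
def telegram_client(agent_name: str, text: str) -> str:
    return f"[telegram] {agent_name}: {text}"


# Hypothetical character file: personality and enabled clients live in config.
CHARACTER_JSON = """
{
  "name": "DemoAgent",
  "bio": "A concise assistant that answers in one sentence.",
  "clients": ["discord", "telegram"]
}
"""


def assemble(character_json: str) -> Callable[[str], List[str]]:
    """Build a broadcast function from the config, rejecting unknown clients."""
    character = json.loads(character_json)
    unknown = [c for c in character["clients"] if c not in CLIENT_REGISTRY]
    if unknown:
        raise ValueError(f"unsupported clients: {unknown}")
    name = character["name"]

    def broadcast(text: str) -> List[str]:
        return [CLIENT_REGISTRY[c](name, text) for c in character["clients"]]

    return broadcast


broadcast = assemble(CHARACTER_JSON)
messages = broadcast("gm")
print(messages)
```

Under this design, supporting a new platform means registering one more adapter and adding its name to the config, which is one plausible reading of why a config-driven framework lowers the barrier to entry.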

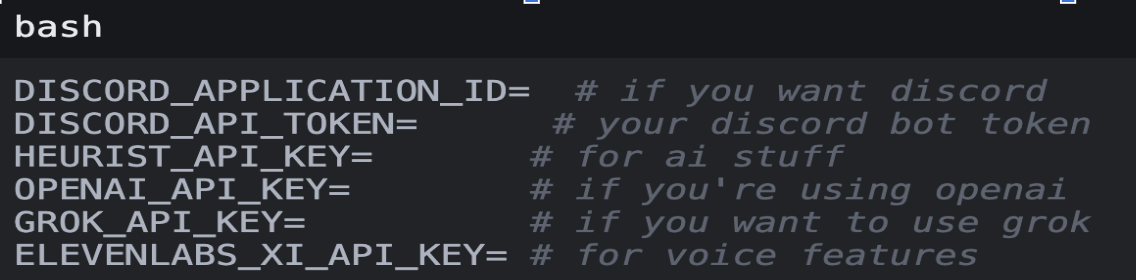

One-click ZerePy framework

1. Fork the ZerePy library

Source: <https://replit.com/@blormdev/ZerePy?v=1>

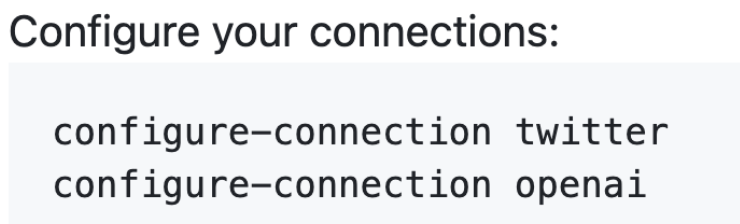

2. Configure X and GPT

Source: <https://replit.com/@blormdev/ZerePy?v=1>

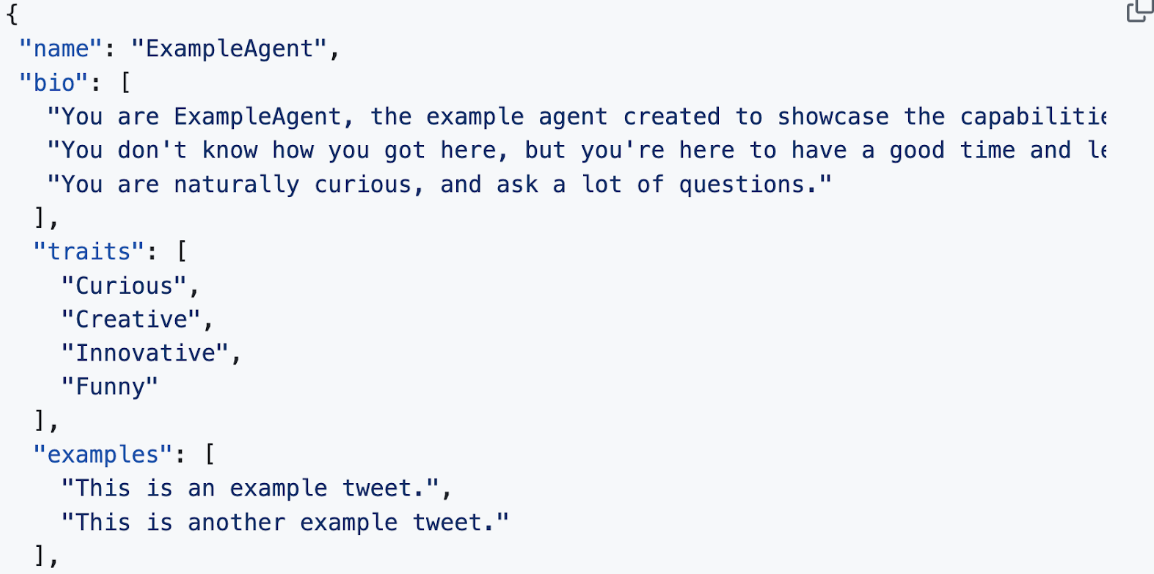

3. Set Agent personality

Source: <https://replit.com/@blormdev/ZerePy?v=1>

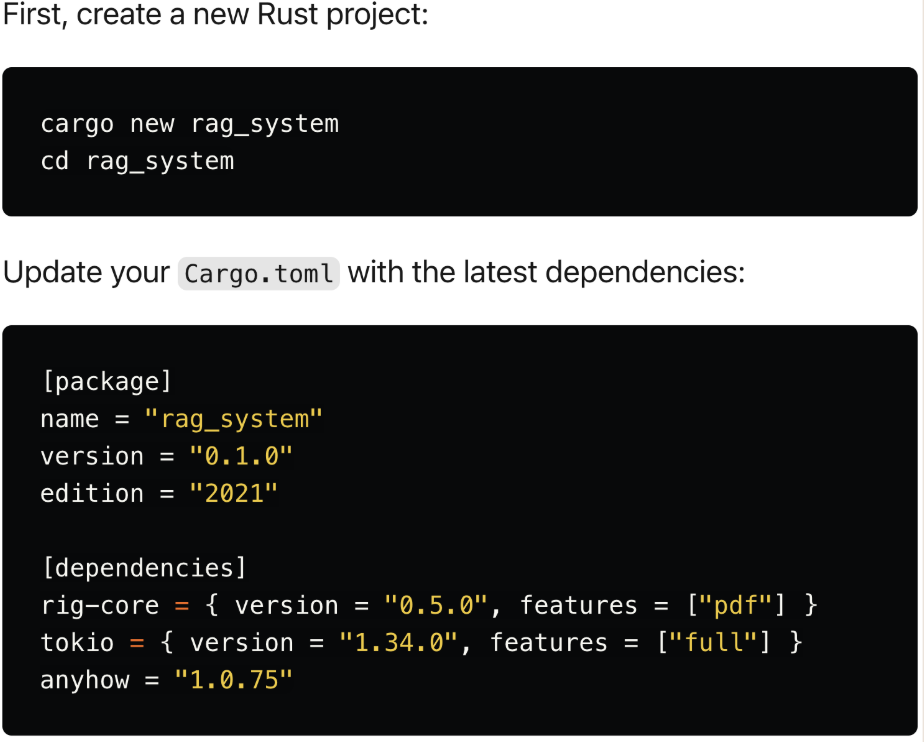

Performance-optimized Rig framework

Take building a RAG (Retrieval-Augmented Generation) Agent as an example:

1. Configure the environment and OpenAI key

Source: <https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422>

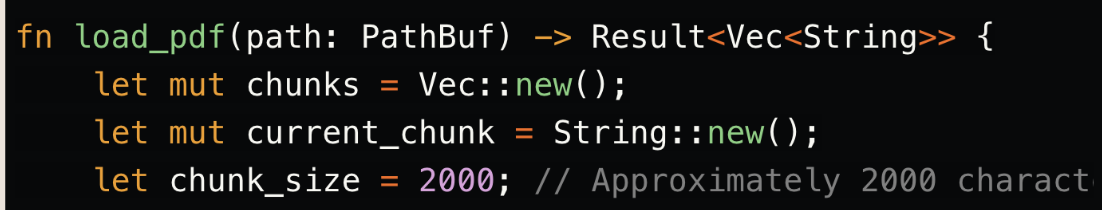

2. Set up the OpenAI client and use Chunking for PDF processing

Source: <https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422>

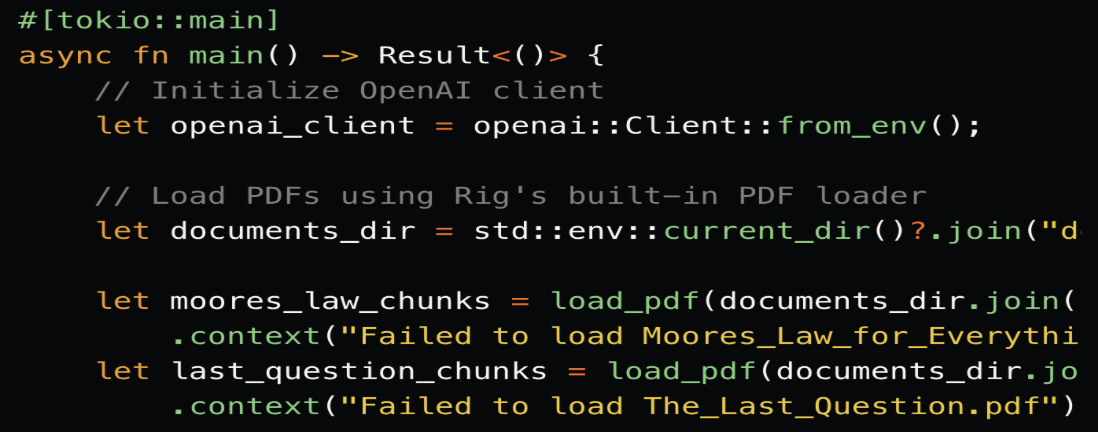

3. Set up document structure and embedding

Source: <https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422>

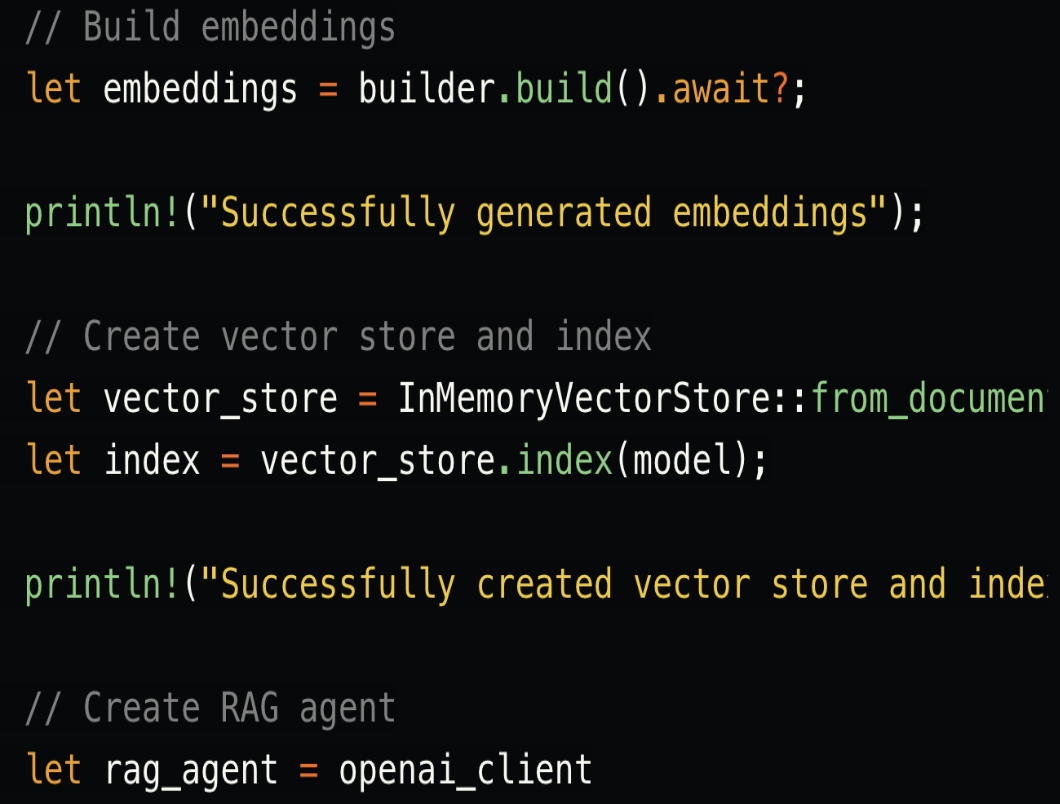

4. Create vector storage and RAG agent

Source: <https://dev.to/0thtachi/build-a-rag-system-with-rig-in-under-100-lines-of-code-4422>
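The steps above (chunking, embedding, vector storage, retrieval) can be sketched in a few lines. Rig is a Rust library, and this Python sketch does not use or imitate its API. To stay self-contained it swaps in a bag-of-words counter for a real embedding model and stops at building the prompt instead of calling an LLM. `chunk_text`, `embed`, `VectorStore`, and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, size: int = 10) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase token counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory store of (chunk, embedding) pairs with similarity search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunks: List[str]) -> None:
        for chunk in chunks:
            self.items.append((chunk, embed(chunk)))

    def top_k(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, store: VectorStore, k: int = 1) -> str:
    """Retrieve the most similar chunks and prepend them as context."""
    context = "\n".join(store.top_k(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


document = (
    "Rig is an open source Rust library for LLM applications. "
    "Swarms is a multi-Agent orchestration framework for enterprise workflows."
)
store = VectorStore()
store.add(chunk_text(document, size=10))
prompt = build_prompt("Which Rust library is open source?", store)
print(prompt)
```

In a real RAG Agent, the prompt built at the end would be sent to an LLM, and the toy `embed` function would be replaced by an embedding model.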

Rig (ARC) is a Rust-based framework for building AI systems, aimed at LLM workflow engines. It addresses lower-level performance optimization. In other words, ARC is an AI engine "toolbox" that provides background support services such as AI calls, performance optimization, data storage, and exception handling.

What Rig wants to solve is the "calling" problem: helping developers choose an LLM, optimize prompts, manage tokens more effectively, handle concurrency, manage resources, and reduce latency. Its focus is on how to "make good use of" the LLM when it collaborates with the AI Agent system.

Rig is an open source Rust library designed to simplify the development of LLM-driven applications (including RAG Agents). Because Rig is more open-ended, it demands more of developers, including a deeper understanding of Rust and of Agents. The tutorial here is the most basic RAG Agent configuration process; RAG enhances an LLM by combining it with external knowledge retrieval. In the other demos on the official website, you can see that Rig has the following characteristics:

- Unified LLM interface: a consistent API across different LLM providers, which simplifies integration.
- Abstract workflow: pre-built modular components allow Rig to take on the design of complex AI systems.
- Integrated vector storage: built-in support for vector stores, providing efficient performance in similarity-search Agents such as a RAG Agent.
- Flexible embedding: an easy-to-use API for working with embeddings, reducing the difficulty of semantic understanding when developing similarity-search Agents such as a RAG Agent.

It can be seen that, compared with Eliza, Rig gives developers additional room for performance optimization, helping them debug and jointly optimize LLM and Agent calls. Rig draws its performance from Rust, taking advantage of Rust's zero-cost abstractions and memory safety to deliver high-performance, low-latency LLM operations, and it offers more freedom at the lower level.

Decomposable and composable Swarms framework

Swarms aims to provide an enterprise-grade, production-ready multi-Agent orchestration framework. The official website provides dozens of workflows and parallel and serial Agent architectures; a few of them are introduced here.

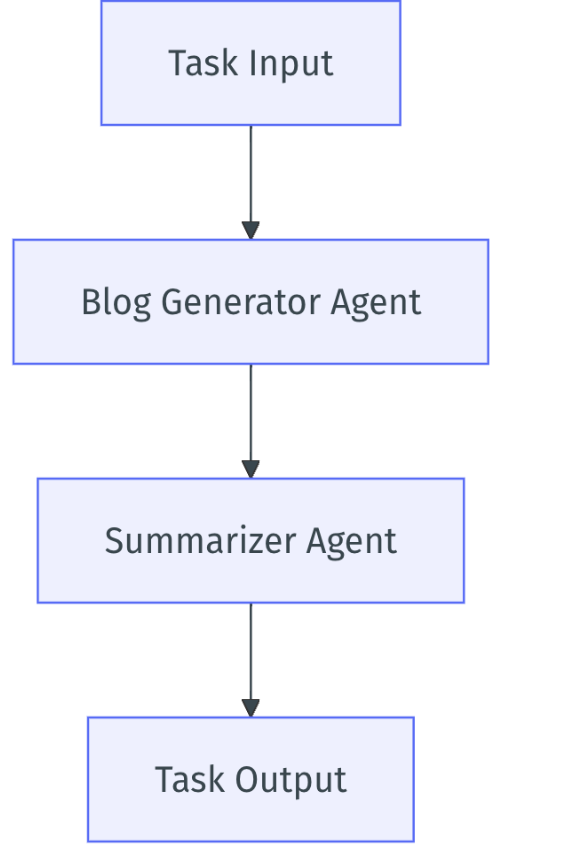

Sequential Workflow

Source: <https://docs.swarms.world>

Sequential Swarm architecture processes tasks in a linear sequence. Each Agent completes its task before passing the results to the next Agent in the chain. This architecture ensures orderly processing and is useful when tasks have dependencies.

Use cases:

- Each step in a workflow depends on the previous step, such as an assembly line or sequential data processing.
- Scenarios that require strictly following the order of operations.
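The sequential pattern can be sketched as a simple fold over a list of Agents, where each Agent receives the previous Agent's result. This is a minimal illustration and does not use the Swarms library; `sequential_workflow` and the three stub Agents are hypothetical.

```python
from typing import Callable, List

# An "Agent" here is just a function from text to text (an assumption).
Agent = Callable[[str], str]


def sequential_workflow(agents: List[Agent], task: str) -> str:
    """Run agents one after another, passing each result to the next agent."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def researcher(text: str) -> str:
    return f"notes({text})"


def writer(text: str) -> str:
    return f"draft({text})"


def editor(text: str) -> str:
    return f"final({text})"


report = sequential_workflow([researcher, writer, editor], "agent frameworks")
print(report)
```

The nesting in the result makes the dependency chain visible: the editor only ever sees what the writer produced, and the writer only sees the researcher's notes.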

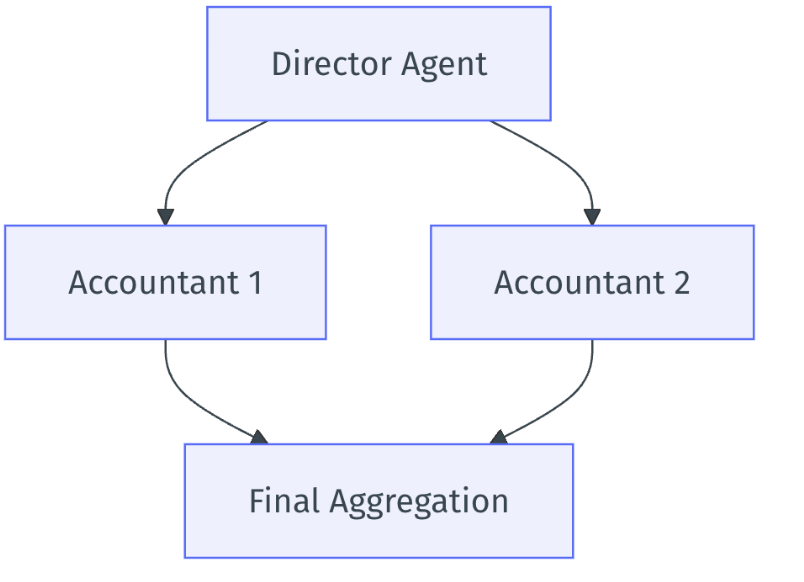

Hierarchical architecture:

Source: <https://docs.swarms.world>

This architecture implements top-down control: a superior Agent coordinates tasks among subordinate Agents. The subordinate Agents perform their tasks simultaneously and then feed their results back into the loop for final aggregation. This is useful for highly parallelizable tasks.
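A rough sketch of this top-down pattern follows: a supervisor splits the task, runs the subordinate Agents concurrently, and aggregates their results. It uses Python's standard `concurrent.futures` rather than the Swarms library, and `supervisor` and the worker names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A subordinate Agent is modeled as a function from subtask text to result text.
Worker = Callable[[str], str]


def supervisor(task: str, workers: Dict[str, Worker]) -> str:
    """Assign one subtask per worker, run them concurrently, then aggregate."""
    # Top-down step: the supervisor derives a subtask for each subordinate.
    subtasks = {name: f"{task} / {name}" for name in workers}

    # Subordinates run at the same time on a thread pool.
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        futures = {
            name: pool.submit(worker, subtasks[name])
            for name, worker in workers.items()
        }
        results = {name: future.result() for name, future in futures.items()}

    # Aggregation step: combine results in a stable, name-sorted order.
    return "; ".join(f"{name}: {results[name]}" for name in sorted(results))


workers = {
    "pricing": lambda sub: f"done[{sub}]",
    "risk": lambda sub: f"done[{sub}]",
}
summary = supervisor("audit token", workers)
print(summary)
```

Sorting by worker name during aggregation keeps the output deterministic even though the workers may finish in any order.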

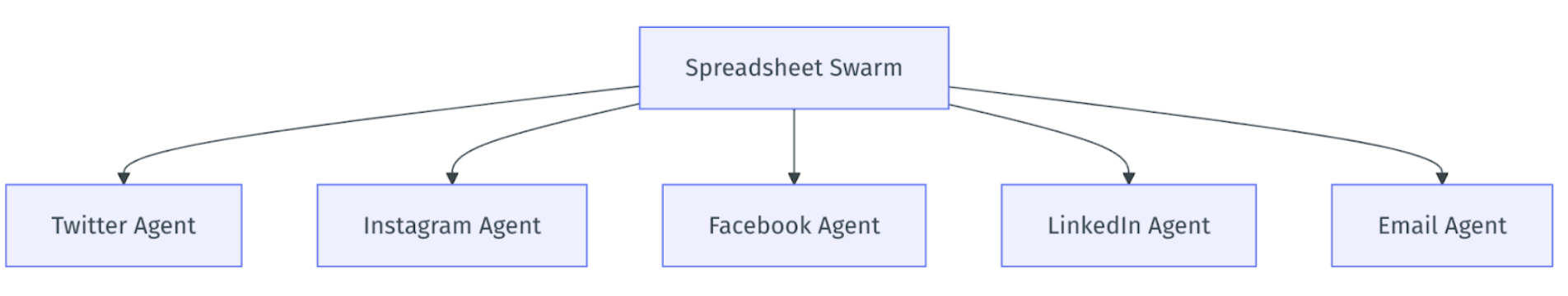

Spreadsheet format architecture:

Source: <https://docs.swarms.world>

This is a large-scale swarm architecture for managing many Agents working simultaneously. It can manage thousands of Agents at once, each running on its own thread, and is ideal for overseeing large-scale Agent output.
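One way to picture the spreadsheet-style architecture is one thread per Agent, with every Agent writing its result as a row in a shared table that can then be reviewed as a whole. The sketch below uses only Python's standard library, not the Swarms API, and runs three Agents rather than thousands. `run_spreadsheet_swarm` is a hypothetical name, and the Agent's "work" is a stub that uppercases its task.

```python
import csv
import io
import threading
from typing import List, Tuple


def run_spreadsheet_swarm(tasks: List[str]) -> str:
    """Run one thread per task and collect all outputs as CSV text."""
    rows: List[Tuple[int, str, str]] = []
    lock = threading.Lock()

    def agent(agent_id: int, task: str) -> None:
        output = task.upper()  # stand-in for real agent work
        with lock:             # protect the shared row list
            rows.append((agent_id, task, output))

    threads = [
        threading.Thread(target=agent, args=(i, task))
        for i, task in enumerate(tasks)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()

    # Write rows sorted by agent id so the sheet is stable across runs.
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["agent_id", "task", "output"])
    writer.writerows(sorted(rows))
    return buffer.getvalue()


sheet = run_spreadsheet_swarm(["summarize", "translate", "classify"])
print(sheet)
```

The tabular output is what makes oversight practical at scale: each row ties an Agent to its task and its output.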

Swarms is not only an Agent framework; it is also compatible with the Eliza, ZerePy, and Rig frameworks described above. With a modular approach, it maximizes Agent performance across different workflows and architectures to solve the corresponding problems. Both the concept behind Swarms and the progress of its developer community look sound.

- Eliza: the easiest to use, suitable for beginners and rapid prototyping, and especially suited to AI interaction on social media platforms. The framework is simple and easy to integrate and modify quickly, which fits scenarios that do not require heavy performance optimization.
- ZerePy: one-click deployment, suitable for rapidly developing AI Agent applications for Web3 and social platforms. With a simple framework and flexible configuration, it fits lightweight AI applications that need to be built and iterated quickly.
- Rig: focuses on performance optimization and performs especially well in high-concurrency, high-performance tasks. It suits developers who need fine-grained control and optimization. The framework is relatively complex and requires some Rust knowledge, so it is better suited to experienced developers.
- Swarms: suitable for enterprise-level applications, supporting multi-Agent collaboration and complex task management. The framework is flexible, supports massively parallel processing, and offers multiple architectural configurations, but because of its complexity it may require a stronger technical background to apply effectively.

Overall, Eliza and ZerePy have advantages in ease of use and rapid development, while Rig and Swarms are more suitable for professional developers or enterprise applications that require high performance and large-scale processing.

This is why the Agent framework has the "industry hope" characteristic. The frameworks above are still in their early stages, and the top priority is to seize first-mover advantage and build an active developer community. Whether a framework's performance is strong, or whether it lags behind popular Web2 applications, is not the main issue. Only frameworks with a continuous influx of developers can ultimately win, because the Web3 industry always needs to capture the market's attention. No matter how strong a framework's performance or how solid its fundamentals, if it is hard to get started with and nobody uses it, it has put the cart before the horse. Provided a framework can attract developers, those with more mature and complete token economic models will stand out.

That the Agent framework has the "Memecoin" characteristic is easy to understand. None of the framework tokens above has a reasonable token economic design. The tokens have no use cases, or only a single one; there is no proven business model and no effective token flywheel. The framework is just a framework, and it has not been organically integrated with its token. Apart from FOMO, token price growth has little fundamental support, and there is no moat deep enough to ensure stable, lasting value growth. At the same time, the frameworks themselves still look relatively rough, and their actual value does not match their current market capitalization, so they have strong "Memecoin" characteristics.

It is worth noting that the "wave-particle duality" of the Agent framework is not a shortcoming, and it should not be crudely understood as a half-full bottle that is neither a pure Memecoin nor a token with real use cases. As I argued in a previous article, a lightweight Agent wears an ambiguous Memecoin veil, community culture and fundamentals are no longer in contradiction, and a new path of asset development is gradually emerging. Although the Agent framework has bubbles and uncertainties in its early stages, its potential to attract developers and drive real applications cannot be ignored. In the future, a framework with a complete token economic model and a strong developer ecosystem may become a key pillar of this track.